“AI makes up stuff. Don’t trust it.” It’s a common criticism you’ll hear, dismissively exclaimed, especially by anti-AI critics. As you likely know by now, they are referring to hallucinations.

While it’s true that this has been known to happen, it is also true that in the past 2 years the issue has reduced considerably. It is not a given that these errors will always be the case.

Until now, the workaround from a user’s standpoint has been to cross-check the generated info. Or to apply the chain-of-thought (CoT) method, a prompt engineering technique that simply asks the AI to break down, step by step, how it arrives at the answer. In other words, guiding it through its reasoning.
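For anyone who has never tried it, a chain-of-thought prompt can be as plain as the sketch below. It only builds the text you would paste or send to a model; no particular AI service is called. The helper name `with_chain_of_thought` and the exact wording of the instruction are my own illustration, not an official formula.

```python
def with_chain_of_thought(question: str) -> str:
    """Wrap a question in a step-by-step instruction.

    Hypothetical helper for illustration: the wording is an assumption,
    and other phrasings of the same request work too.
    """
    return (
        f"{question.strip()}\n\n"
        "Think through this step by step. "
        "Show each step of your reasoning, then give the final answer "
        "on its own line starting with 'Answer:'."
    )


# Example question (made up for this sketch).
prompt = with_chain_of_thought(
    "A train leaves at 14:10 and arrives at 16:45. How long is the trip?"
)
print(prompt)
```

The question stays untouched; all the technique adds is the request to show the working before the answer.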

OpenAI’s o1 model, whose top tier (o1 pro mode) came with the $200/month ChatGPT Pro plan, was developed to reason by itself. Users were given a window into its thinking process. Basically, a look under the hood.

They called this a reasoning model. The next evolution. The baby had grown into a teenager. It could “think logically” now, and you could read its thoughts.

This essentially eliminated the need to prompt for “chain of thought”, as it was already baked into the system (at this tier of service), along with the ability to combine internet search with its output.

Quality of use, strategically tucked away behind a paywall. Why? Because it was so very dearly expensive to run, they said. We don’t work in the kitchen, after all. So what do we know? We accepted. The big boys & girls had spoken.

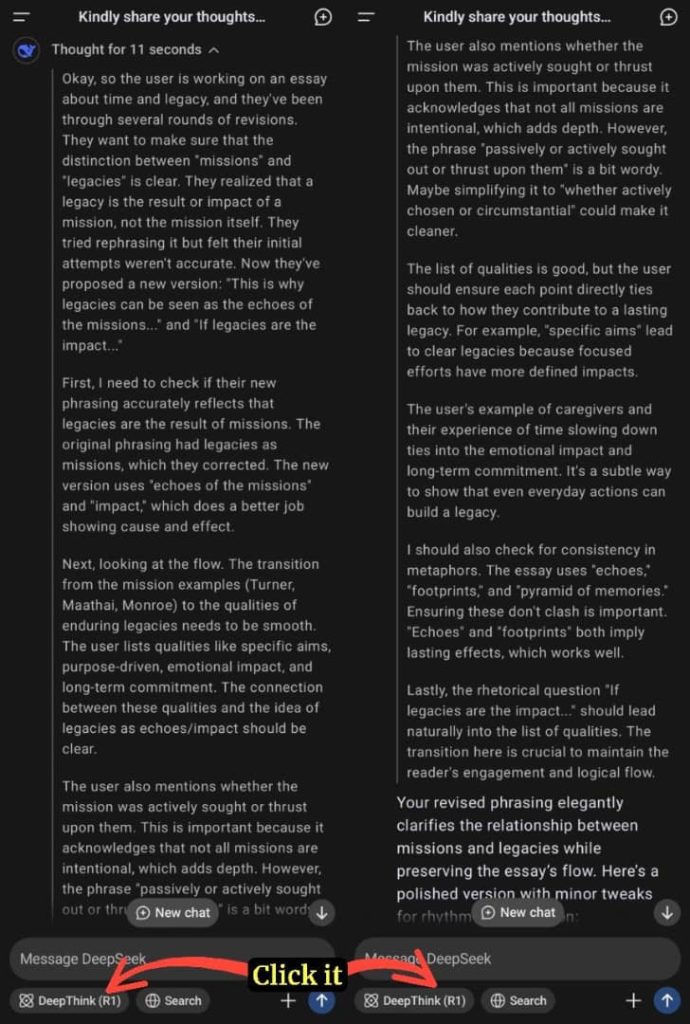

Up until…DeepSeek!

“Nada Paps, we got y’all,” said DeepSeek.

What DeepSeek did was offer a reasoning model, baked in with internet search, for free. With unlimited use. That opened the space up to a whole flurry of questions, feeding into what some had suspected… that though these things are expensive to run, the pioneering AI companies had inflated the cost. Had the Big AI companies formed a cartel and conspired to milk the public and investors? Maybe not.

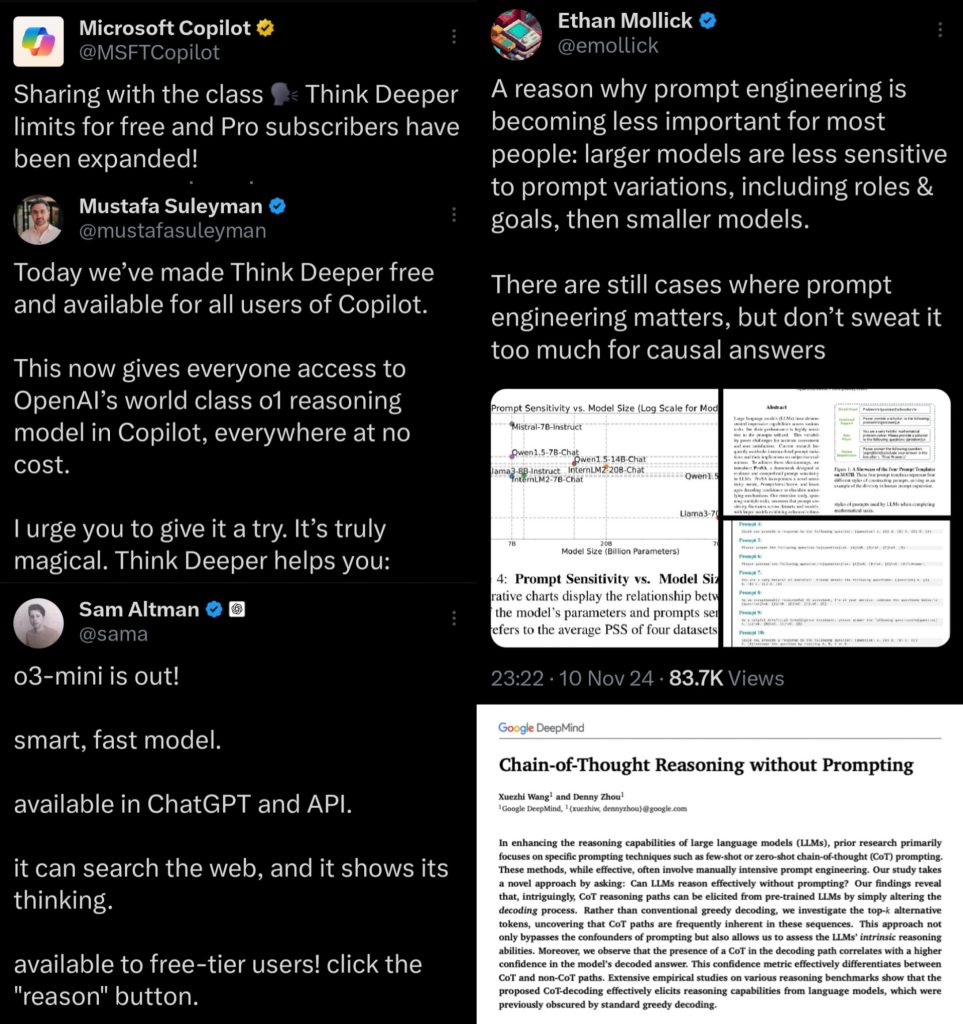

What has followed is an opening up of services. Others have begun opening up reasoning capabilities to the masses. Somehow, they can suddenly afford it.

But the advancement of reasoning models also calls into question the longevity of prompt engineering as an essential skill. Should everyone learn it? I doubt it.

It’ll likely be reserved for very specialised sectors, as the goal is to do away with technical knowledge in operations. In the past 2 years, a lot of formulas have become redundant, like assigning roles to the AI. You no longer need to do that to get a good result. Sometimes the AI even does the prompting for you, rewording your prompt internally to get better results. Ideally, the approach to using LLMs will be one of good human communication skills, as natural as communicating with your colleagues.

What’s sure is that the future is unpredictable. Our worst worries might never be realised. Maybe in the end, we’ll all be alright.

Or not. Trade wars and AI policies might get uglier. We’ll see what happens.

For now, enjoy the freebies.
